Since 2014, Citadele Bank and Scandic Fusion have partnered to tackle diverse data analytics challenges. Now, with a shift to the cloud via Microsoft Azure, Citadele is transforming its analytics ecosystem to deliver personalized experiences, optimize operations, and drive innovation.

Citadele Bank is a modern banking platform for individuals and businesses in the Baltics. Alongside traditional banking services, Citadele offers a wide range of next-generation financial technology solutions, such as opening an account with a selfie, the generative AI virtual assistant Adele, and payments to mobile numbers. Simply put, it gives people more opportunities by redefining banking. In 2024, the bank's commitment to digitalization was recognized with a Digital Banking Award from Fintech Magazine for outstanding achievements in digital banking solutions.

The partnership between Citadele Bank and Scandic Fusion began exactly 10 years ago, in 2014, with the development of a sales and employee motivation KPI algorithm within the bank's on-premise Oracle data warehouse. Over the years, this successful collaboration has expanded to address the data analytics needs of many areas of Citadele's business, including core banking, AML, regulatory reporting (AnaCredit, Account Register of Lithuanian State Tax Inspectorate), customer and product analytics, leasing, factoring, CBL life insurance, HR and much more.

Strive to improve customer offering

In early 2022, internal discussions marked the beginning of a new era: Citadele sought to explore cloud-based solutions for data analytics use cases as well, to meet the growing business need to interact with customers through personalized messages, next-best product offers, and reminders sent via various digital channels.

To achieve this, Citadele decided to implement the Pega Customer Decision Hub (CDH) solution. It is a low-code platform designed for workflow automation and generative AI-powered decision-making. Its applications are compatible with cloud platforms, marking the start of Citadele's data analytics journey into the cloud. For this initiative, Microsoft Azure was chosen as the cloud data platform, and Scandic Fusion was selected as the implementation partner.

As with many new innovative projects, the initial scope was to create a proof-of-concept by preparing and delivering limited data sets from on-premise sources to the Pega platform via the Azure platform. Long story short, we started small, but over time, the business needs grew significantly. Read on for the details!

Evolution from XS to XXL business needs

Due to the success of the PoC, the scope expanded significantly over time, including a broader range of products, business requirements, data consumers, and the need for different cloud-based solutions.

Next-best offer

The initial business scope of the Pega CDH proof-of-concept (PoC) focused on private customers and basic Citadele products such as card accounts and loans. The primary requirement was to provide Pega CDH with the data used to create business rules that generate next-best product offers for customers. The resulting offers are delivered through digital channels such as internet bank banners, emails, and mobile app push notifications.

Over time, both the list of products and the customer base expanded, encompassing insurance policies, deposits, various product offers, merchant agreements and corporate customers. The addition of new products was seamless, demonstrating the flexibility and scalability of the solution.

The solution was later enhanced to include customer reminders related to usage of products sent via the same digital channels.

Next, customer interaction data was incorporated, detailing responses to offers. Examples include whether a customer clicked on, ignored, or accepted an offer, providing insights into customer behavior.

Initially, the Pega CDH platform was the only consumer of the Azure Synapse data models. Over time, it was joined by Qlik Sense, and discussions around connecting SAP Business Objects are ongoing.

Pension Pillar 2

As of July 1, 2024, amendments to the Law on State-Funded Pensions came into force, enabling banks to offer customers the convenience of viewing their second-level pension savings directly through internet banking platforms. In addition, fund managers can now assess the suitability of specific pension plans for individual participants and provide tailored recommendations. Azure services were used to build a significant part of the solution infrastructure: gathering customer data from the State Social Insurance Agency through the Citadele API, processing it in Azure Synapse, and interacting with another cloud service backed by an Azure PostgreSQL database.

Data Science

The Citadele data science team has adopted Databricks as the data platform for ML project implementation. An initiative was introduced to build a common staged data layer for multiple business use cases in analytics and data science. The goal was to establish a Lakehouse architecture with automated data flows for the Bronze layer (storing raw data in parquet files) and Silver layer (cleansed and conformed data).

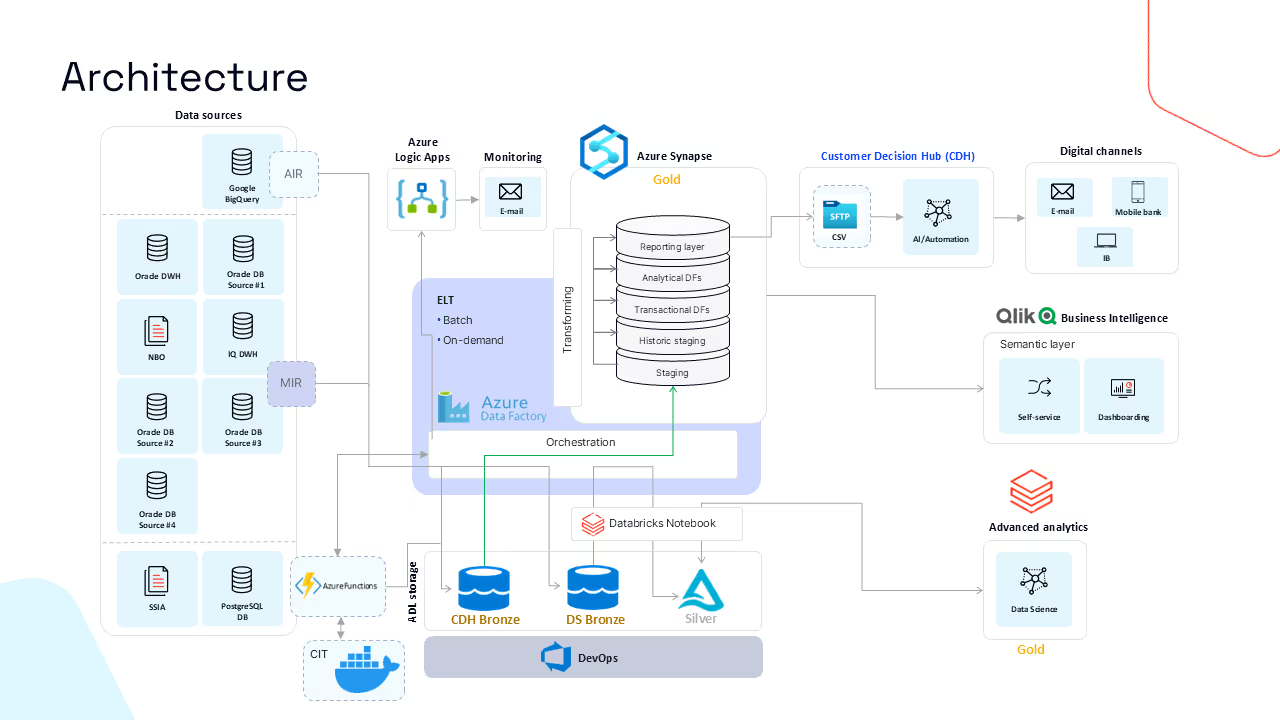

The golden solution architecture

Now that the business requirements for the CDH platform have been outlined, it's clear that a robust solution architecture is essential to effectively meet those needs.

The solution involves managing a diverse set of data sources daily, including the Oracle on-premise DWH, the Temenos Transact core banking system (T24), the Sybase IQ on-premise DWH, Google BigQuery, and external input files such as CSV. What's challenging is that data in these sources is available with different time lags, including:

- "As of yesterday" (T-1).

- T-2 (two days old data).

- Monthly updates.

- A near-real-time solution introduced as the latest innovation, processing T24 data every 30 minutes, 24/7.

On the data ingestion and transformation side, source data is first loaded into Azure Data Lake Storage (ADLS), primarily as parquet files, ensuring efficient storage and performance. Analytics and transformations are then applied to structure the data into the necessary Azure Synapse data models. Data from Synapse tables is aggregated and formatted into CSV files. These CSV files are placed on an SFTP server, where they are accessed by the Pega solution. The entire process is orchestrated by Azure Data Factory (ADF), ensuring smooth workflows and integration across multiple systems.
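The "aggregate and format into CSV" step can be illustrated with a minimal sketch. It uses only the Python standard library; the column names (`customer_id`, `product`, `balance`) and the aggregation itself are assumptions made for illustration, not the actual Synapse models or the file layout Pega expects.

```python
import csv
import io
from collections import defaultdict


def aggregate_to_csv(rows):
    """Sum balances per (customer, product) and render the result as CSV text."""
    totals = defaultdict(float)
    for row in rows:
        totals[(row["customer_id"], row["product"])] += row["balance"]

    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["customer_id", "product", "total_balance"])
    # Sort for a deterministic file, which makes downstream diffs easier.
    for (customer_id, product), total in sorted(totals.items()):
        writer.writerow([customer_id, product, f"{total:.2f}"])
    return buffer.getvalue()


# Hypothetical sample rows standing in for a Synapse query result.
sample_rows = [
    {"customer_id": "C1", "product": "card", "balance": 100.0},
    {"customer_id": "C1", "product": "card", "balance": 50.5},
    {"customer_id": "C2", "product": "loan", "balance": 2000.0},
]
csv_text = aggregate_to_csv(sample_rows)
```

In the real pipeline this step would run inside Synapse and ADF; the sketch only shows the shape of the transformation before the file is dropped on the SFTP server.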

For interaction with other cloud databases for request processing and data preparation, we rely on Azure Functions. A two-way solution has been developed for the needs of the Pension Pillar 2 initiative:

- On-demand data requests - another cloud database requests data from Azure Synapse by passing a unique parameter in JSON format. The requested data is prepared in Synapse and returned in JSON format.

- Scheduled data delivery - a daily data delivery process is scheduled, where data from another cloud database is received in JSON format for further processing.
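The on-demand flow can be sketched as a plain request handler. This is an illustration under stated assumptions: the parameter name `request_id`, the response fields, and the in-memory `FAKE_SYNAPSE` lookup are all hypothetical stand-ins, and the Azure Functions binding code is deliberately left out.

```python
import json

# Hypothetical stand-in for data prepared in Synapse, keyed by the request parameter.
FAKE_SYNAPSE = {"REQ-001": {"plan": "Active", "savings_eur": 1234.56}}


def handle_request(body: str) -> str:
    """Parse a JSON request, look up the prepared data, and return a JSON response."""
    try:
        request = json.loads(body)
    except json.JSONDecodeError:
        return json.dumps({"status": "error", "message": "invalid JSON"})
    if not isinstance(request, dict) or "request_id" not in request:
        return json.dumps({"status": "error", "message": "missing request_id"})

    request_id = request["request_id"]
    record = FAKE_SYNAPSE.get(request_id)
    if record is None:
        return json.dumps({"status": "not_found", "request_id": request_id})
    return json.dumps({"status": "ok", "request_id": request_id, "data": record})


ok_response = json.loads(handle_request('{"request_id": "REQ-001"}'))
bad_response = json.loads(handle_request("not json"))
```

Keeping the handler free of platform-specific types makes it easy to test locally before wiring it into a function app.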

What is interesting is that the whole solution currently operates on the lowest Synapse Data Warehouse Unit (DWU 100), which has proven to deliver:

- Reasonable performance for processing and analytics.

- Cost-efficiency, both in terms of time and resource utilization.

This architecture demonstrates a robust, scalable, and efficient system for managing diverse data sources and delivering timely outputs to the Pega CDH platform for further processing, even with the latest near-real-time requirements. The overall solution architecture is outlined below:

Technical challenges throughout the journey

Gathering data from different sources often presents challenges, particularly when the sources differ in format and technology. At Scandic Fusion, the best practice for data staging is to establish a universal data loading mechanism.

For this project, the universal configuration includes:

- Automated metadata gathering to streamline the staging process and development.

- Dynamic configuration that determines whether the data load is a first-time load or a repetitive one.

- Automated creation of Azure Synapse tables to store the staged data efficiently.

- An optional column selection feature allowing users to define which columns from specific tables should be loaded.

This approach ensures consistency, flexibility, and scalability, simplifying data integration from various source systems.
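A metadata-driven loader of this kind might be planned along the following lines. This is a hedged sketch, not the project's implementation: the `SourceTable` structure, the `stg` schema name, and the `initial`/`incremental` labels are assumptions introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SourceTable:
    name: str
    columns: dict  # column name -> SQL type, as gathered from source metadata
    selected: list = field(default_factory=list)  # optional subset; empty means all


def plan_load(table: SourceTable, existing_tables: set) -> dict:
    """Decide the load mode and, for a first-time load, generate the staging DDL."""
    unknown = [c for c in table.selected if c not in table.columns]
    if unknown:
        raise ValueError(f"columns not found in source metadata: {unknown}")

    cols = table.selected or list(table.columns)
    first_load = table.name not in existing_tables
    ddl = None
    if first_load:
        col_defs = ", ".join(f"[{c}] {table.columns[c]}" for c in cols)
        ddl = f"CREATE TABLE stg.[{table.name}] ({col_defs})"
    return {
        "mode": "initial" if first_load else "incremental",
        "columns": cols,
        "ddl": ddl,
    }


customer = SourceTable(
    name="customer",
    columns={"id": "INT", "name": "NVARCHAR(100)", "notes": "NVARCHAR(4000)"},
    selected=["id", "name"],
)
first_plan = plan_load(customer, existing_tables=set())
repeat_plan = plan_load(customer, existing_tables={"customer"})
```

The point of the design is that adding a new source table means adding metadata, not writing a new pipeline.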

At the end of 2023, an additional business need arose to start staging Pega offer data more frequently than once per day. This required a transformation from "as of yesterday" to "near-real-time" data staging, demanding a new solution to support data loads every 30 minutes.
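One simple way to think about a 30-minute cadence is as a sequence of fixed, non-overlapping load windows. The sketch below computes the most recently completed window for a given timestamp; aligning windows to the half hour is an assumption made here, not a detail confirmed by the project.

```python
from datetime import datetime, timedelta

INTERVAL = timedelta(minutes=30)


def load_window(now: datetime) -> tuple:
    """Return (start, end) of the most recently completed 30-minute window."""
    # Round down to the nearest half hour to get the window end.
    end = now.replace(minute=(now.minute // 30) * 30, second=0, microsecond=0)
    return end - INTERVAL, end


window = load_window(datetime(2024, 1, 1, 10, 47, 12))
midnight_window = load_window(datetime(2024, 1, 2, 0, 5, 0))
```

Because each window has an explicit start and end, a failed run can be repeated for exactly the same slice of source changes.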

One of the biggest challenges was related to data versioning, as the main source system, T24, consists of multiple applications. A change in just one entry of a table triggers a new entry version across all of that application's tables. Since changes need to be displayed in time, a specific configuration table was created to log only those records that have actually changed. Another significant hurdle involved handling master data and child table dependencies. For instance, when a record is deleted in the source system, the change is only reflected in the master table, but on the Synapse side, the deletion must be reflected in all related child tables. This was addressed by loading each application's master table before its other tables, ensuring that child tables had access to the master data and enabling proper identification and handling of the deleted records.
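The master-first ordering can be sketched as follows: deletions are detected on the master table, then propagated to every child table. The key name `master_id` and the in-memory table representation are hypothetical; in practice this logic would be expressed as SQL against Synapse.

```python
def propagate_deletes(master_keys_before, master_keys_after, child_tables):
    """Find keys deleted from the master table and drop matching child rows."""
    deleted = set(master_keys_before) - set(master_keys_after)
    cleaned = {
        name: [row for row in rows if row["master_id"] not in deleted]
        for name, rows in child_tables.items()
    }
    return deleted, cleaned


# Hypothetical example: master record 2 was deleted in the source system.
children = {
    "accounts": [{"master_id": 1, "value": "a"}, {"master_id": 2, "value": "b"}],
    "limits": [{"master_id": 2, "value": "c"}, {"master_id": 3, "value": "d"}],
}
deleted_keys, cleaned_children = propagate_deletes({1, 2, 3}, {1, 3}, children)
```

Loading the master table first is what makes `deleted` available before any child table is touched.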

Additionally, time delays posed a challenge, as each data manipulation statement - INSERT, UPDATE, and DELETE - in every script took a few seconds to execute, and these seconds quickly accumulated. The solution was to optimize performance by reducing the number of scripts and consolidating as many activities as possible into a single data load script.
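A rough back-of-the-envelope calculation shows why the per-statement delay matters within a 30-minute cycle. The statement counts and the 3-second figure below are purely illustrative assumptions, not measurements from the project.

```python
def overhead_seconds(statements: int, per_statement_seconds: float) -> float:
    """Total fixed overhead if every statement costs roughly the same delay."""
    return statements * per_statement_seconds


CYCLE_SECONDS = 30 * 60  # one near-real-time load cycle

# Hypothetical numbers: many small scripts vs. a consolidated load script.
before = overhead_seconds(statements=120, per_statement_seconds=3.0)
after = overhead_seconds(statements=15, per_statement_seconds=3.0)

share_before = before / CYCLE_SECONDS
share_after = after / CYCLE_SECONDS
```

With these assumed numbers, fixed overhead alone drops from a fifth of the cycle to a small fraction of it, before any actual data processing is counted.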

Key takeaways to keep in mind

For readers embarking on similar initiatives, here are some valuable insights to consider:

First, on the technical side, if your data analytics solution architecture includes Azure Data Factory (ADF) as the ELT tool, consider leveraging these additional Azure components for enhanced efficiency and governance:

- Azure Policy and storage lifecycle rules - Azure Policy ensures enforcement and governance of organizational standards. For file retention, lifecycle management rules on the storage account can automatically delete files from Azure Data Lake Storage (ADLS) folders after a specified time. Lifecycle management is built into the storage account, but it must be configured for specific use cases. Once implemented, file deletion becomes automatic, preventing ADLS from being filled with outdated data and consuming unnecessary resources.

- DevOps - Azure DevOps is essential when working with ADF. It offers numerous benefits, including traceability, support for parallel development, and automated deployments between development (DEV) and production (PROD) environments.

- Azure Key Vault - Use Key Vault to store passwords and connection credentials securely. This approach avoids saving sensitive data openly. Key Vault also integrates seamlessly with DevOps, ensuring that the correct passwords are automatically applied when switching between DEV and PROD environments.
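For the automatic file clean-up mentioned in the first bullet, one option is a storage lifecycle management rule. The sketch below builds such a rule as JSON; the rule name, folder prefix, and 30-day retention are hypothetical values chosen for illustration, and the rule would still need to be applied to the storage account through the portal, CLI, or an infrastructure template.

```python
import json


def build_cleanup_rule(name: str, prefix: str, days: int) -> dict:
    """Build a lifecycle management policy that deletes old blobs under a prefix."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": name,
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [prefix],  # "<container>/<folder>" style prefix
                    },
                    "actions": {
                        "baseBlob": {
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                },
            }
        ]
    }


# Hypothetical rule: remove staged files 30 days after their last modification.
policy = build_cleanup_rule("delete-old-staging", "staging/pega/", 30)
policy_json = json.dumps(policy, indent=2)
```

Keeping the retention period as a parameter makes it straightforward to use different values for DEV and PROD.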

Next, consider the following points regarding the Integration Runtime (IR) component. During the proof-of-concept phase, an Azure-hosted IR was chosen as the execution engine for data integration processes. Note that IR cost grows with the time the runtime is in use. While monitoring overall Azure costs and running DWU scale-up tests, we concluded that a Self-Hosted IR might be a good alternative: its price per hour is more than 50% lower, while performance remained almost the same, even for large data pipelines. By transitioning to a Self-Hosted IR, we reduced our monthly IR costs by more than 50%. However, an Azure-hosted IR might still be a suitable choice for certain use cases, particularly for:

- Scenarios requiring seamless scaling without the need for managing additional infrastructure.

- Small to medium-sized data pipelines that do not run for extended periods.

For large data volumes or long-running data staging processes, Self-Hosted IR is a more cost-efficient alternative.
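The cost reasoning above comes down to simple arithmetic: monthly IR cost is roughly runtime hours multiplied by the hourly rate. The rates and hours below are abstract, hypothetical units rather than actual Azure prices, and the sketch ignores the cost of the machine that hosts a Self-Hosted IR.

```python
def monthly_cost(hours: float, rate_per_hour: float) -> float:
    """Approximate monthly IR cost as usage hours times the hourly rate."""
    return hours * rate_per_hour


def saving_share(old_cost: float, new_cost: float) -> float:
    """Fraction of the old cost that is saved by switching."""
    return (old_cost - new_cost) / old_cost


# Hypothetical figures: 200 runtime hours per month, rates in abstract units.
azure_hosted = monthly_cost(hours=200, rate_per_hour=1.00)
self_hosted = monthly_cost(hours=200, rate_per_hour=0.40)
saving = saving_share(azure_hosted, self_hosted)
```

Because the saving scales with runtime hours, the difference matters most for long-running pipelines and is negligible for short, infrequent ones.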

Document your processes from day one. In fast-moving projects, it's common for teams to focus solely on achieving key results, often postponing process documentation to the last minute or neglecting it entirely. However, based on our experience, documenting the process during development - rather than post-factum - yields significantly better results. This approach allows teams to capture technical nuances while fresh, leading to clearer and more accurate documentation and shared understanding among all involved parties.

Finally, effective communication is key, particularly in projects involving multiple stakeholders. During the development of the Pension Pillar 2 project, several parties were involved, including Citadele IT, Scandic Fusion, and the State Social Insurance Agency. Although some groups organized regular follow-up meetings, the information flow was initially not seamless, resulting in occasional misunderstandings.

To address these challenges, two key activities significantly improved project organization and enhanced the quality of deliverables:

- Common MS Teams channel - introducing a shared Microsoft Teams channel provided a centralized platform for discussions and quick decision-making. This helped streamline communication and foster collaboration among all parties.

- Live demo sessions - the most impactful improvement came from organizing live demo sessions. These sessions allowed teams to present their technical solution to one another, enabling mutual understanding, reducing ambiguities, and driving progress more efficiently.

Benefits that have been recognized

Since the Pega proof-of-concept started in 2022, Citadele's use of the cloud had expanded significantly by 2024, both horizontally and vertically. The Pega solution has evolved, and entirely new business initiatives have been successfully implemented. This expansion has been made possible through close collaboration with Citadele's IT department, which has enabled fast, effective decision-making while avoiding unnecessary bureaucracy.

The journey to the cloud has brought Citadele numerous benefits, including:

- Flexibility - seamlessly expanding infrastructure as needed.

- Scalability - easily increasing performance when necessary (e.g., adjusting Data Warehouse Unit (DWU) levels, running tests, evaluating effects, and scaling back as needed).

- FinOps best practices - maximizing value from the cloud to drive efficient growth, including optimizing price performance and purchasing reserved capacity (Synapse DWU level) for cost saving.

Looking ahead, the next step in Citadele's cloud journey involves an even broader transformation - from "as of yesterday" to "near-real-time" data staging - unlocking new possibilities for Synapse data consumers.

“Scandic Fusion has played a significant role in helping Citadele advance our data analytics journey to the cloud. Their support has enabled us to deliver more personalized and timely customer experiences while gaining the flexibility, scalability, and cost-efficiency needed to meet our growing demands. Their expertise and collaborative approach have made this process smoother and more rewarding, and we value their partnership greatly.”

Guntars Andersons, Head of IT at Citadele Bank

Parting thoughts

For organizations primarily relying on on-premise systems, even a small business need with the potential for growth can make the journey to the cloud a wise choice. The cloud offers an environment for rapid innovation while providing flexible resources and cost optimization.

As cloud technologies evolve constantly, it's worth remembering that what may not be possible today could very well become achievable tomorrow. Embracing the journey to the cloud is not just a strategic move - it's an exciting and rewarding adventure!
